Cavity test with mpi

|  |  |  |

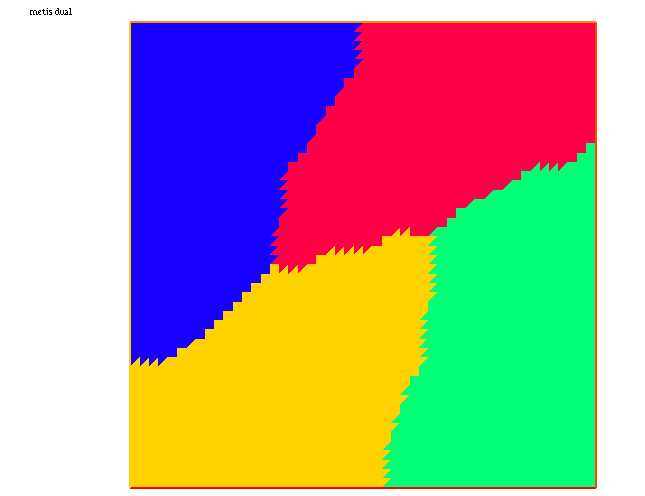

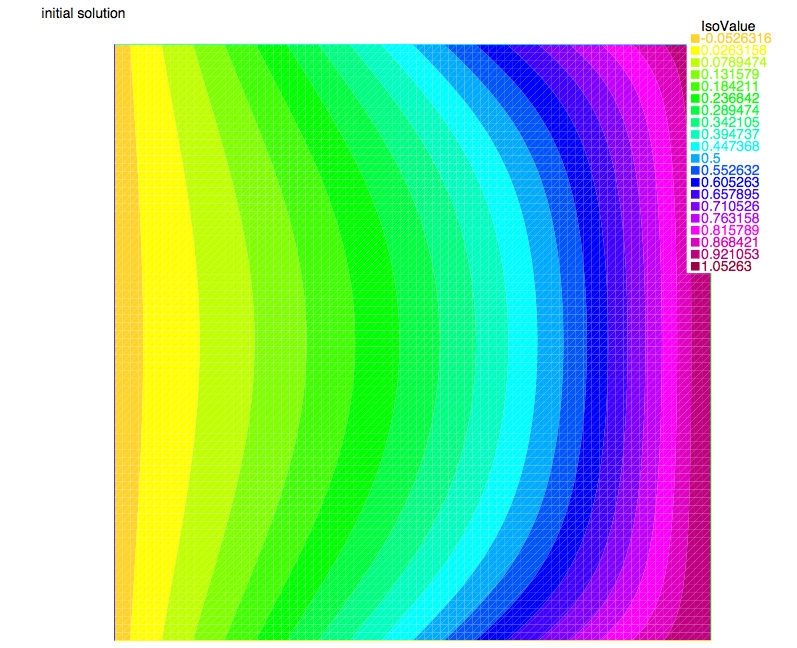

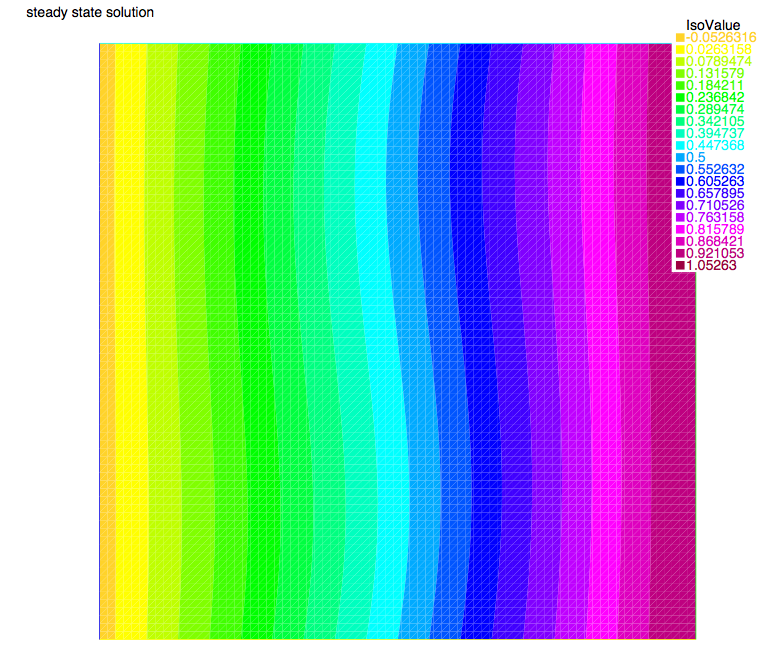

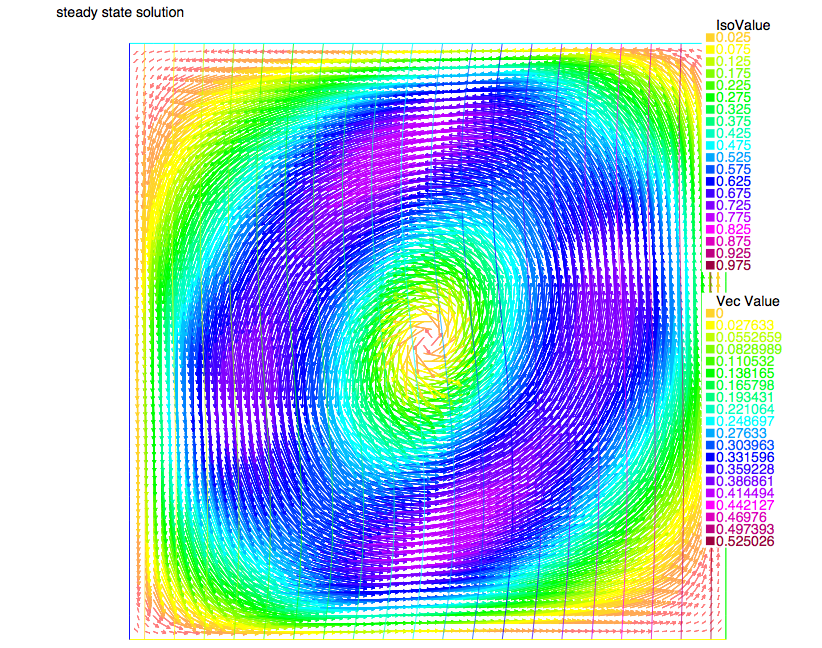

| Metis mesh partition | Initial T | Final T | Final V |

info pdf file Paraview screenshot png file

Run example using ff-mpirun -np 4 DDM-cavityconv-2d.edp -ns -gmres 3

Thanks to Giuseppe Pitton giuseppe.pitton@gmail.com

download example: DDM-cavityconv-2d.edp and idp files DDM-Schwarz-macro-cavity.idp, DDM-funcs-v2-cavity.idp or return to main page

// NBPROC 2

// ff-mpirun -np 4 DDM-cavityconv-2d.edp -glut ffglut -n 11 -k 1 -d 1 -ns -gmres 1

/*

a first true parallele example fisrt freefem++

Ok up to 200 proc for a Poisson equation..

See the Doc for full explaiantion

F Hecht Dec. 2010.

Modified by G. Pitton Aug 2013 for natural convection in cavity.

Please note that having inflow and outflow on the same subdomain boundary

may lead to an ill-posed problem, hence some care is required when decomposing

the domain. This script is intended only as demonstration for coupled

problems in DDM framework.

-------------------

usage :

mpirun -np [cpu number] FreeFem++-mpi DDM-cavityconv-2d.edp [-glut ffglut] [-n N] [-k K] [-d D] [-ns] [-gmres [0|3]

or:

ff-mpirun [mpi parameter] DDM-cavityconv-2d.edp [-glut ffglut] [-n N] [-k K] [-d D] [-ns] [-gmres [0|1]

argument:

-glut ffglut : to see graphicaly the process

-n N: set the mesh cube split NxNxN

-d D: set debug flag D must be one for mpiplot

-k K: to refined by K all elemnt

-ns: reomove script dump

-gmres 0 : use iterative schwarz algo.

1 : Algo GMRES on residu of schwarz algo.

2 : DDM GMRES

3 : DDM GMRES with coarse grid preconditionner (Good one)

*/

load "MPICG"

load "iovtk"

load "medit"

load "metis"

include "getARGV.idp"

include "DDM-Schwarz-macro-cavity.idp"

include "AddLayer2d.idp"

include "DDM-funcs-v2-cavity.idp"

searchMethod=0; // more safe seach algo (warning can be very expensive in case lot of ouside point)

// 0 by default

assert(version >=3.11);

real[int] ttt(10);int ittt=0;

macro settt {ttt[ittt++]=mpiWtime();}//

verbosity=getARGV("-vv",0);

int vdebug=getARGV("-d",1);

int ksplit=getARGV("-k",1);

int nloc = getARGV("-n",25);

string sff=getARGV("-p","");

int gmres=getARGV("-gmres",3);

int nC = getARGV("-N" ,max(nloc/10,4));

bool RAS=1;

int sizeoverlaps=1; // size of overlap

int[int] ffordervel=[0]; // used to save results in vtk format

if(mpirank==0 && verbosity)

cout << " vdebug: " << vdebug << " kspilt "<< ksplit << " nloc "<< nloc << " sff "<< sff <<"."<< endl;

string sPk="P2-Stokes-2gd";

func Pk=[P2,P2,P1]; /* this must be compliant with the VhC and Whi spaces definition */

//int Pknbcomp=3;

func bool plotMPIall(mesh &Th,real[int] & u,string cm)

{if(vdebug) PLOTMPIALLU(mesh,Pk, defPk3, Th, u, allu, { cmm=cm,dim=2,fill=1,value=1}); return 1;}

func bool plotMPIallp(mesh &Th,real[int] & u,string cm)

{if(vdebug) PLOTMPIALLU(mesh,Pk, defPk3, Th, u, allu2, { cmm=cm,dim=2,fill=1,value=1}); return 1;}

mpiComm comm(mpiCommWorld,0,0);// trick : make a no split mpiWorld

int ipart= mpiRank(comm); // current partition number

if(ipart==0) cout << " Final N=" << ksplit*nloc << " nloc =" << nloc << " split =" << ksplit << endl;

int[int] l111=[1,2,3,4];

settt

mesh Thg=square(nloc,nloc,[1*x,y],label=l111); /* global mesh, will be split later */

mesh ThC=square(nC,nC,[1*x,y],label=l111); // Coarse Mesh

fespace VhC(ThC,[P2,P2,P1]); /* velocity space for the coarse problem */

fespace QhC(ThC,P2); /* temperature space for the coarse problem */

VhC [UU,VV,PP];

/* start partitioning for the velocity problem */

BuildPartitioning(sizeoverlaps,mesh,Thg,Thi,aThij,RAS,pii,jpart,comm,vdebug,Vhi,Phg,Vhg)

if(ksplit>1)

{

for(int jp=0;jp<jpart.n;++jp)

aThij[jp] = trunc(aThij[jp],1,split=ksplit);

Thi = trunc(Thi,1,split=ksplit);

}

BuildTransferMat(ipart,mesh,Pk,3,[0,1,-1],Thi,Whi,Whij,Thij,aThij,Usend,Vrecv,jpart,vdebug,Pii,sMj,rMj)

/* end partitioning for the velocity problem */

/* start partitioning for the thermal problem */

BuildPartitioning(sizeoverlaps,mesh,Thg,TThi,aTThij,RAS,Tpii,Tjpart,comm,vdebug,TVhi,TPhg,TVhg)

if(ksplit>1)

{

for(int jp=0;jp<Tjpart.n;++jp)

aTThij[jp] = trunc(aTThij[jp],1,split=ksplit);

TThi = trunc(TThi,1,split=ksplit);

}

/* on BuildTransferMat, be sure that [P2] is compliant with the QhC and Qhi spaces definition */

BuildTransferMat(ipart,mesh,[P2],1,[0],TThi,Qhi,Qhij,TThij,aTThij,Tsend,Qrecv,Tjpart,vdebug,TPii,TsMj,TrMj)

/* end partitioning for the thermal problem */

plot(ThC,wait=0,cmm="Coarse mesh");

if (mpirank==0) plot(Thi,wait=0,cmm="cpu0 local mesh");

/* start defining the problem */

real T0=1,Tw=0;

/* fine space variables */

Whi [u,u1,p],[v,v1,q],[up,u1p,pp]=[0,0,0];

Qhi T,Q,Tp=0;

fespace Whnul(Thi,P2);

matrix Ai,ATi;

real eps=1e-10;

/* some useful macros */

macro grad(u) [dx(u),dy(u)] //EOM

macro div(u1,u2) (dx(u1)+dy(u2)) //EOM

/********************************************************************************

* Here starts the variational forms definition *

* Modifying the variational forms to get Navier-Stokes instead of Stokes *

* trying with Gr=20000, Pr=0.015 *

********************************************************************************/

real Gr=2.E4,Pr=0.015; /* Grashof and Prandtl numbers */

real dt=2.E-3,Time=1; /* time step and simulation length */

varf vPb([u1,u2,p],[v1,v2,q])=

int2d(Thi)(grad(u1)'*grad(v1) + grad(u2)'*grad(v2))

+ int2d(Thi)(-div(u1,u2)*q-div(v1,v2)*p+1.E-9*p*q)

+ int2d(Thi)(([u1,u2]'*[v1,v2])/dt)

+ int2d(Thi)(sqrt(Gr)*([up,u1p]'*grad(u1))*v1+([up,u1p]'*grad(u2))*v2)

+ on(10,u1=0,u2=0)

+ on(4,u1=0,u2=0)

+ on(3,u1=0,u2=0)

+ on(2,u1=0,u2=0)

+ on(1,u1=0,u2=0)

;

varf vPbbc([u1,u2,p],[v1,v2,q])=

//int2d(Thi)(convect([up,u1p],-dt,up)*v1/dt+convect([up,u1p],-dt,u1p)*v2/dt)+

int2d(Thi)(([up,u1p]'*[v1,v2])/dt)+

int2d(Thi)(sqrt(Gr)*Tp*v2)

+ on(1,2,3,4,u1=0,u2=0)

;

/* repeating the definition for the coarse space problem */

varf vPbC([u1,u2,p],[v1,v2,q])=

int2d(ThC)(grad(u1)'*grad(v1) + grad(u2)'*grad(v2))

+ int2d(ThC)(-div(u1,u2)*q-div(v1,v2)*p+1.E-9*p*q)

+ int2d(ThC)(([u1,u2]'*[v1,v2])/dt)

+ int2d(ThC)(sqrt(Gr)*([up,u1p]'*grad(u1))*v1+([up,u1p]'*grad(u2))*v2)

- int2d(ThC)(([up,u1p]'*[v1,v2])/dt)

+ int2d(ThC)(-sqrt(Gr)*Tp*v2)

+ on(4,u1=0,u2=0)

+ on(3,u1=0,u2=0)

+ on(2,u1=0,u2=0)

+ on(1,u1=0,u2=0)

;

/* this is to mark the internal boundaries */

varf vPbon10([u1,u2,p],[v1,v2,q])=on(10,u1=1,u2=1)+on(1,2,3,4,u1=0,u2=0);

varf vthermal(T,Q) =

int2d(TThi)(1/dt*(T*Q)

+1/Pr*(grad(T)'*grad(Q)))

// convective term (in the case of finite differences)

+ int2d(TThi)(sqrt(Gr)*([up,u1p]'*grad(T))*Q)

+ on(1,3,T=x)

+ on(4,T=Tw)

+ on(2,T=T0)

+ on(10,T=0)

;

varf vthermalbc(T,Q) =

int2d(TThi)(1/dt*(Tp*Q))

+ on(1,3,T=x)

+ on(4,T=Tw)

+ on(2,T=T0)

+ on(10,T=0)

;

/* repeating for the coarse space */

varf vthermalC(T,Q) =

int2d(ThC)(1/dt*(T*Q)

+1/Pr*(grad(T)'*grad(Q)))

// convective term (in the case of finite differences)

+ int2d(ThC)(sqrt(Gr)*([up,u1p]'*grad(T))*Q)

- int2d(ThC)(1/dt*(Tp*Q))

+ on(1,3,T=x)

+ on(4,T=Tw)

+ on(2,T=T0)

;

varf vthermalon10(T,Q)=on(10,T=1)+on(1,2,3,4,T=0);

u[]=vPb(0,Whi,tgv=1);

real[int] onG10=vPbon10(0,Whi); // on 1 on 10

real[int] Bi=vPbbc(0,Whi);//Bi(Whi.ndof)

Ai=vPb(Whi,Whi,solver=sparsesolver);

T[]=vthermal(0,Qhi,tgv=1);

real[int] TonG10=vthermalon10(0,Qhi); // on 1 on 10

real[int] BTi=vthermalbc(0,Qhi);//BTi(Whi.ndof)

ATi=vthermal(Qhi,Qhi,solver=sparsesolver);

DDMDeffuncAndGlobals(NavierStokes2,comm,jpart,Whi,Vhc,3,Ai,vPbC,onG10,Pii,Usend,Vrecv,[0,1,-1],VhC,sMj,rMj)

DDMDeffuncAndGlobals(Thermal2,comm,Tjpart,Qhi,Qhc,1,ATi,vthermalC,TonG10,TPii,Tsend,Qrecv,[0],QhC,TsMj,TrMj)

vdebug=0; // if 1 plots u (only horizontal component of velocity) after the solution is found

int nsteps=Time/dt;

for (int j=0;j<nsteps;j++){ /* here begins time cycle */

ittt=0;

eps=1.e-10;

/* only the variational form vPb changes at each iteration because of the update of up[]

ATi does not change: convective term is on rhs */

Ai=vPb(Whi,Whi,solver=sparsesolver);

real[int] Bi=vPbbc(0,Whi);

NavierStokes2DDMSolver(Bi,u,v,gmres,eps,vdebug)

eps=1.e-10;

/* now the thermal problem */

ATi=vthermal(Qhi,Qhi,solver=sparsesolver);

real[int] BTi=vthermalbc(0,Qhi);

Thermal2DDMSolver(BTi,T,Q,gmres,eps,vdebug)

if (mpirank==0) plot([u,u1],T,fill=0,value=1,cmm="cpu0, Time="+j*dt);

/* update the solutions: */

[up,u1p,pp]=[u,u1,p];

Tp=T;

} /* end of time cycle */

/* saving the results in vtk format */

savevtk("NavierStokes"+mpirank+".vtk",Thi,[u,u1,0],order=ffordervel,dataname="Velocity");

savevtk("Thermal"+mpirank+".vtk",TThi,T,order=ffordervel,dataname="Temperature");